[NOTES] CS 6515: Intro to Graduate Algorithms Recap

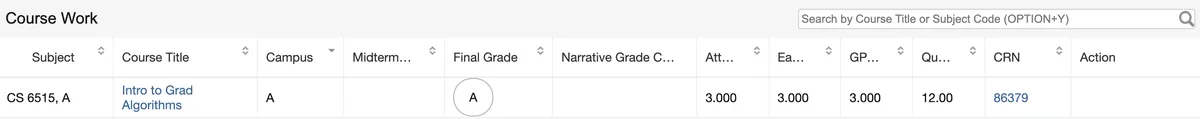

Update: Finally got an A in CS-6515.

You'll know soon enough what this class is about. I'll instead tell y'all what it's like to take this class:

- It's referred to as the "final-boss" of the MS CS degree at GaTech.

- To graduate, you need a minimum grade of B.

- A lot of students attempt this class twice (or more) to get a B.

- This class often leads to severe burnout and also many students change their specialization to completely avoid this class.

- This was the grade distribution in the summer (45.8% class basically either failed or dropped the class):

I have started writing this post on 5th December, 2024; five days before the CS-6515 final exam. Here are some reasons why I shouldn't be taking this exam:

- I already have a B in this class so effectively the final is optional.

- I start a new job soon after this exam. I could instead spend the time relaxing and doing fun things.

- No one will care about my final grade.

- Several of my classmates and TAs have advised against taking this exam because I need 85+ in the final exam to get an A. This is an especially hard target because the final exam covers the entire syllabus and hence, is significantly harder.

- I'm running on fumes after a year of studying. It's mentally arduous and I don't think I can study even for a minute now.

I'm left with few convincing reasons to take this exam. After an hour of contemplation these are the reasons why I WILL take the exam:

- I don't think I can score an 85+ in the finals. Attempting the final is futile. But I feel it is my duty to give my best and it would bring great honour to the legacy of Chatrapati Shivaji Maharaj, if I uphold my duties.

- I'm severely burnt out and I cannot continue studying. Or maybe this is a lie. Only one way to find out.

Note: These are meant to be rough/short-hand notes that will help me retain information that I've already studied in extreme depth previously. It's difficult for me to study right now because there isn't enough mental stimulation/challenge to enter a flow-state. Writing notes in LaTex and actively trying to summarize the syllabus slightly increases the challenge and only maximise the flow-state. To the general audience, these will not be very useful.

1. Notations

- Big-O notation: . Important:

- small-o notation: . Important:

- Big-Omega notation: . Important:

- small-omega notation: . Important:

2. Proofs by Induction

This is not gonna be directly tested but there are standard patterns in which proofs by induction that you can simply use in your exams.

- Base Case: Easiest part where you argue why algorithm is right for input size 1 or 0 (or whatever is base level input). You WILL get partial marks here (so don't fumble this)

- Hypothesis: Claim that the algo holds true for inputs up to size n.

- Induction Step:

- Pattern 1: Argue (argument 1) how problem changes with input input and how it can be solved correctly. Then use claim in step 2 to state that everything up to input size n works correctly hence, argument 1 + hypothesis is the required proof

- Pattern 2: Change input to and follow the previous method

- Pattern 3: Assume the opposite/contradiction and then show that algorithm works correctly without the contradiction and hence correct.

3. Master's Theorem

I just need to remember some basic results to solve short questions. This is mostly used to quickly figure out runtimes of some recursive functions.

- When we unroll a recursion tree then on level of the tree, the input size is .

- When we unroll a recursion tree then on level of the tree, the number of nodes/vertices are .

- When we unroll a recursion tree then on level of the tree, the total time spent is:

- Across all the layers and nodes the total time spent is:

There are three cases for Master's Theorem:

- :

- :

- :

4. Polynomial Multiplication and FFT

- Time Complexity: where is the degree of the polynomial

- Product Representation:

- Where and

- Data structure is roughly this:

- Imp Observation:

- ()

- Then coefficient of in is dot product of

- Some FFT Tricks:

- 3-SUM: You have 3 sets A, B and C. You have to find if sum of two elements from A and B can be found in C. Trick: . A is larger array and B is smaller array then for FFT we must flip B to get convolution (Call this ). For if coefficient of is non-zero in then 3-SUM output is true else false. TC:

5. DFS, BFS and Topological Order

- DFS/BFS Runtime:

- Topological Order: Order of vertices so that all edges go "left to right". Sorting by post-order in descending direction gives a valid topological order.

- Edge Types: (note that you will have to refer to the algorithm at the end of this section)

- Tree Edge: Edges used by DFS to recurse

- Back Edge: If you find an edge that connects to a vertex that HASN'T finished it's traversal then it's a back edge (if postorder[u] == 0 then it's a back edge where 'u' the neighbour of some vertex we are currently visiting.

- Forward Edge: (A,D) forward if A visited before D (preorder[u] > preorder[v])

- Cross Edge: (A,D) cross edge if A visited after D

- The algorithm roughly looks like this:

preorder = [0] * n

postorder = [0] * n

precounter = 0

postcounter = 0

visited = {}

def DFS(v):

nonlocal preorder, postorder, precounter, postcounter

visited[v] = True

preorder[v] = precounter

precounter += 1

for neighbour u of v:

if not visited[u]:

DFS(u) # Tree Edge

elif postorder[u] == 0:

# (v, u) is a backedge

elif preorder[u] > preorder[v]:

# (v,u) is forward edge

else:

# (v,u) is cross edge

postorder[v] = postordercounter

postordercounter += 1

- DFS/BFS: STACK/QUEUE.

- FILO: Algo behaves like a stack (DFS)

- FIFO: Algo behaves like a queue (BFS)

6. Search Algorithms

Note that these results can be directly used in exams.

- Theorem: Dijkstra's Algorithm finds the shortest path start vertex to EVERY OTHER VERTEX if the graph has only positive edge weights.

- Dijkstra's Algorithm uses distance as a priority in priority queue

- The algorithm is roughly this:

def Dijkstra(S):

queue.add((S, null, 0)) # current, parent and distance/priority

distance[S] = 0

while queue not empty:

v, parent, _ = queue.pop() # pop out the lowest priority value (shortest distance)

if visited[v]:

continue

visited[v] = True

distance[v] = distance[parent] + cost(parent, v)

for neighbour u of v:

queue.add(u, v, distance[v] + cost(v, u)) # v is parent and the last param is the priority

- Bottleneck path: Find path that allows carrying max amount of flow?

- Algo is roughly this:

def Bottleneck(S):

queue.add((S, null, 0)) # current, parent and distance/priority

distance[S] = 0

while queue not empty:

v, parent, _ = queue.pop() # pop out the highest priority value (largest edge)

if visited[v]:

continue

visited[v] = True

distance[v] = min(distance[parent], cost(parent, v))

for neighbour u of v:

queue.add(u, v, min(distance[v], cost(v, u))) # v is parent and the last param is the priority

- Min Spanning Tree of G is a spanning tree of G with smallest possible sum of edge weights

- PRIMs algo is roughly this:

def PRIM(S):

queue.add((S, null, 0)) # current, parent and distance/priority aint gonna be used

while queue not empty:

v, parent, _ = queue.pop() # pop out the highest priority value (largest edge)

if visited[v]:

continue

visited[v] = True

T = T union {v, parent}

for neighbour u of v:

queue.add(u, v, cost(v, u)) # v is parent and the last param is the priority

- A* Algo is some heuristic based search algo

- Algorithm is roughly this:

def AStar(S):

queue.add((S, null, 0)) # current, parent and distance/priority

distance[S] = 0

while queue not empty:

v, parent, _ = queue.pop() # pop out the lowest priority value (smallest heuristic value)

if distance[v] > distance[parent] + cost(parent, v):

distance[v] = distance[parent] + cost(parent, v) # found a new smaller path

else:

for neighbour u of v:

queue.add(u, v, distance[v] + cost(v, u) + heuristic(u_)) # v is parent and the last param is the priority

7. Matchings

- Definition: Augmenting path given M is a path consisting of

- Alternate matched and unmatched edges in G (Graph)

- First and Last vertices unmatched in M

- M is a max-matching iff there is no augmenting path

- We can find max-matching efficiently if we can find an augmenting path in polytime.

- How to find augmenting paths? Use Edmond's Blossom algorithm (implementation details not on the test)

- Theorem: We can find augmenting paths in a bipartite graph in O(|E| + |V|) time

- Max Matching in Bipartite graphs runtime: (Time to find a path using BFS * Max number of augmenting paths)

8. Max Flow and Min Cut

- Flow Definition:

- for all

- for all

- For all ,

- Max Flow problem is to find a flow that maximizes

- We can solve bipartite matching using max-flow (Add a source and sink vertex. Source connects to all vertices in bipartite graph vertex set A and sink connects to all vertices in bipartite graph vertex set B).

- Residual Graphs:

- Same vertices V

- Edges have capacity

- Edges with capacity

- Ford Fulkerson Algorithm:

- Start with

- Create residual graph

- Find s-t path in -- Break if no such path exists

- Update and go to step 2

- Runtime for Ford-Fulkerson:

- Theorem: Ford-Fulkerson returns the max-flow

- Theorem: Max-flow = Min-Cut

- There exists a polynomial time algorithm that finds max-st-flow in (This is called the Capacity Scaling algorithm)

- Capacity Scaling Algorithm is roughly as follows:

f = 0

delta = C # C is max capacity

while delta >= 1:

1. Construct Residual graph G^f(delta) where we remove edges of G^f with capacity < delta (keep only those edges that are ATLEAST delta amount)

2. Find st-path in G^f(delta)

2.1 f <- f + P*delta (go to 1)

3. Else if no st-path found:

3.1 delta <- delta / 2

return f

- Runtime of Capacity Scaling is: 9.

- Some observations:

- Each scaling operation of (Delta) performs augmentations. Time

- At the end of any scale ,

- if then it (capacity scaling) behaves like Ford-Fulkerson algorithm.

9. Linear Programming

- Feasible Region: Set of all points satisfying all constraints

- Halfspace: linear inequality

- Is feasible region of LP always connection? -> YES

- Equalities are allowed in LPs. Strict inequalities are not allowed!

- Definition of a Convex Region/Set: A set is CONVEX if for any we have

- Any LP can be solved in time that is polynomial in the number of input bits

- Will a poly sized LP always have a polysized optimal solution?

- YES

- Optimal solution is a "Corner" of feasible region.

- Theorem: For any LP, there exists some non-negative linear combination of constraints s.t.

- LHS = objective

- RHS = optimal value

10. LP Duality

- Primal LP Objective:

- Primal LP Constraints: and

- Primal LP has variables and constraints

- Dual LP Objective:

- Dual LP Constraints: and

- Dual LP has variables and constraints

- Weak Duality Theorem:

- Feasible for Primal LP

- Feasible for Dual LP

- Then optimal primal value is always less than or equal to optimal dual value

- Weak Duality Corollary:

- If we find feasible and feasible where then and are optimal

- If primal LP is unbounded then dual is infeasible and vice-versa

- Strong LP Duality Theorem:

- If primal LP value is finite, then optimal dual LP value is the same.

- OR: Primal LP is feasible and bounded iff dual LP is feasible and bounded.

- Farkas Lemma:

- There exists s.t. and is infeasible then there exists a s.t. and

- Farkas' lemma is a certificate of infeasiblity

- Theorem: There exists a polytime algorithm to check LP-feasibility then there exists a polytime algorithm for LP-Optimization

11. Dynamic Programming

Common Patterns on Test:

- LIS: Runtime is

def LIS(self, nums: List[int]) -> int:

L = len(nums)

dp = [1]*L

dp[0] = 1

max_dp = 1

for i in range(1, L):

for j in range(i-1, -1, -1):

if nums[i] > nums[j]:

dp[i] = max(dp[i], 1+dp[j])

max_dp = max(max_dp, dp[i])

return max_dp

- Max Independent Sets on Trees: Runtime is

M[v] = max{

1 + Sum([M(u) for u in grandchildOf(v)]), # includes the root

Sum([M(u) for u in childOf(v)]) # excludes the root

}

- Tip: For exam, present solution in form of table structure, base-case, induction-step and runtime-complexity.

12. Knapsack using Dynamic Programming

- Note that Knapsack is a NP-Hard problem and hence we don't think it's solvable in polynomial time.

- Polytime vs Pseudo-Polytime Algorithms:

- Polytime: Algorithms that are poly functions of input value

- Pseudo-Polytime: Algorithms that are poly functions of the numeric value of input

- Pseudo-Polytime algo for knapsack:

- Algo 1: Assume B is small (B = poly(n)) => all are small

- Algo runtime:

- Base: { if else }

- Inductive Step: max(, if )

- Answer:

- TC = Space Complexity =

- Algo 2: Assume all values are small. All

- Time Complexity = Space Complexity =

- Table Structure: = min total knapsack size that suffices to get exactly value using subset of jobs

- if not achievable using subset of jobs

- Base Case: { if else if }

- Inductive Step: min( if )

- Answer: max such that

- PTAS vs FPATS:

- PTAS (Poly Time Approx Scheme): approximation. Assume is a constant then you get a polytime algo

- FPTAS (Fully Poly Time Approx Scheme): approximation that is

- Knapsack FPTAS Algo:

Theorem: An algorithm that value optimization and has time

Step 1: Scaling factor

n = num items

v_max = max valued item

= precision

Step 2: Transformation Then change the values like so:

Step 3: Algorithm Use DP to find optimal solution for an instance with values (Algo 2 above)

Step 4: Runtime Time complexity is now:

This algo is polynomial in n and linear in

13. Markov Decision Process (MDP)

- Definition: Finite state space

- finite action space

- start state

- Transition Probabilities: for and

- Input:

- Rewards:

- Goal?: Given time horizon , design an algorithm (policy) that plays actions to maximize

- Policy Definition: An algorithm (policy) tells us what action to take at step

- Denote state of policy at step as

- We know, for any policy :

Okay, typing out notes got boring real quick as well. I need more mental stimulation. I will live-stream my suffering to further stimulate my brain. At this point I am sleeping only 3-4 hours. I am clearly mentally fatigued but let's fucking keep going fellas:

Now that the exam is done, here is what happened: I got an A:

We did it fellas. Note that I did not get an 85+. In fact, I just got an 82. My final grade was 89.37% and the cutoff for an A was 90%. However, more than 80% of the class finished the CIOS survey and hence the grade cutoff was relaxed by exactly one 1%. Everyone who had an 89% got an A.

Final boss of grad-school is defeated. Can't wait to get back to working on real world engineering problems now.